I think I saw the future of headphone technology at the recent 2019 Audio Engineering Society International Conference on Headphone Technology, held in San Francisco from August 27 to 29. My vision came during a presentation by Ramani Duraiswami, a professor at the University of Maryland and also president and founder of VisiSonics, a company dedicated to 3D audio reproduction, mostly for gaming applications. Duraiswami’s presentation didn’t awaken me to any concepts I hadn’t heard of before -- but it did give me the faith that the processing needed to make headphones sound as good and natural as a high-quality set of stereo (or surround) speakers is within our grasp.

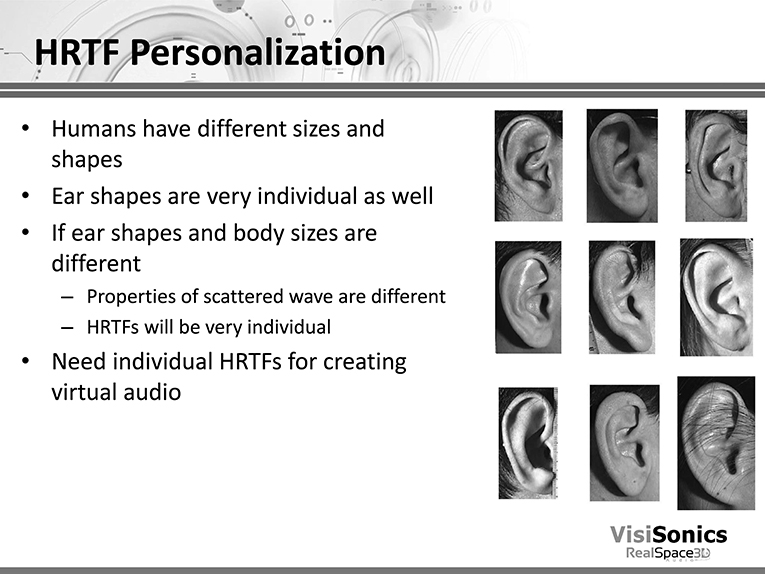

Duraiswami’s presentation explored methods for getting 3D sound reproduction from headphones. As he explained, the crux of the problem lies in the fact that we localize sounds based on a number of physical factors, including the shape of the pinnae, as well as the size and shape of the head and shoulders. Our brains evaluate the way the reflections and frequency-response alterations caused by these physical factors differ from the direct sound. The combined effect of those alterations, which varies with the direction of the sound, is described by what’s called the head-related transfer function, or HRTF. Headphones and earphones bypass our HRTFs, which is why center images from headphones seem to come from inside our heads. By precisely replicating a person’s HRTF using digital processing, it’s possible to restore a natural sense of spatiality and directionality when listening through headphones or earphones.
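
For readers who want to see what "replicating an HRTF using digital processing" means at its simplest, here is a minimal Python sketch. It filters a mono signal through a pair of head-related impulse responses (HRIRs, the time-domain form of an HRTF), one for each ear. The `toy_hrir_pair` function and its numbers (a maximum interaural delay of about 0.66 ms and a 50% level drop at the far ear) are my own illustrative assumptions, not measured data, and they model none of the pinna or shoulder effects described above. This is not how VisiSonics, Smyth, or Creative implement their processing; a real system would substitute measured or computed HRIRs for the listener.

```python
import numpy as np

def toy_hrir_pair(azimuth_deg, sample_rate=48000, length=64):
    """Hypothetical stand-in for a measured HRIR pair.

    Models only an interaural time difference and a level difference.
    Positive azimuth means the source is to the listener's right.
    Returns (hrir_left, hrir_right).
    """
    s = abs(np.sin(np.radians(azimuth_deg)))
    # Assumed maximum delay of about 0.66 ms between the two ears.
    itd_samples = int(round(s * 0.00066 * sample_rate))
    # Assumed level drop of up to 50% at the ear facing away from the source.
    far_gain = 1.0 - 0.5 * s

    near = np.zeros(length)
    near[0] = 1.0                      # sound reaches the near ear first
    far = np.zeros(length)
    far[itd_samples] = far_gain        # delayed, quieter copy at the far ear

    return (far, near) if azimuth_deg >= 0 else (near, far)

def render_binaural(mono, hrir_left, hrir_right):
    """Convolve a mono signal with each ear's impulse response.

    Returns an array of shape (2, len(mono) + len(hrir) - 1):
    row 0 is the left channel, row 1 is the right channel.
    """
    left = np.convolve(mono, hrir_left)
    right = np.convolve(mono, hrir_right)
    return np.stack([left, right], axis=0)

# Usage: place a single click 90 degrees to the listener's right.
click = np.zeros(8)
click[0] = 1.0
hrir_l, hrir_r = toy_hrir_pair(90)
stereo = render_binaural(click, hrir_l, hrir_r)
```

With real HRIRs, each impulse response would also carry the direction-dependent frequency shaping caused by the listener's ears, head, and shoulders, which is the part that differs from person to person.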

The problem is that just as everyone’s physical characteristics are different, so are their HRTFs. I tackled this topic in my June 2016 column that described the Smyth Realiser A8 headphone processor, which uses tiny microphones inserted into the ears to measure the listener’s HRTF. The result was the most realistic sound I’ve heard from headphones since the late 1990s, when I’d had my HRTF measured by a company named Virtual Listening Systems.

We’re talking a huge leap in realism here -- even more dramatic than you’d get by going from a good set of $100 headphones to the multi-thousand-dollar models from Focal, HiFiMan, and Meze. Basically, headphones with accurate, personalized HRTF processing don’t sound like headphones at all. That impression of having the audio emerging from the center of your head disappears -- and the effect is far more natural and powerful than any “one size fits all” technology such as crossfeed circuits or Dolby Headphone can deliver.

Obviously, it’s wildly optimistic to assume that consumers will be willing to spend thousands for a Smyth Realiser, or to insert microphones into their ears and run a bunch of test tones through a set of speakers to calibrate the processor. And as Duraiswami pointed out, directly measuring HRTF -- a process that typically requires an anechoic chamber, a complicated array of speakers and/or microphones, and about an hour of time -- isn’t practical, either.

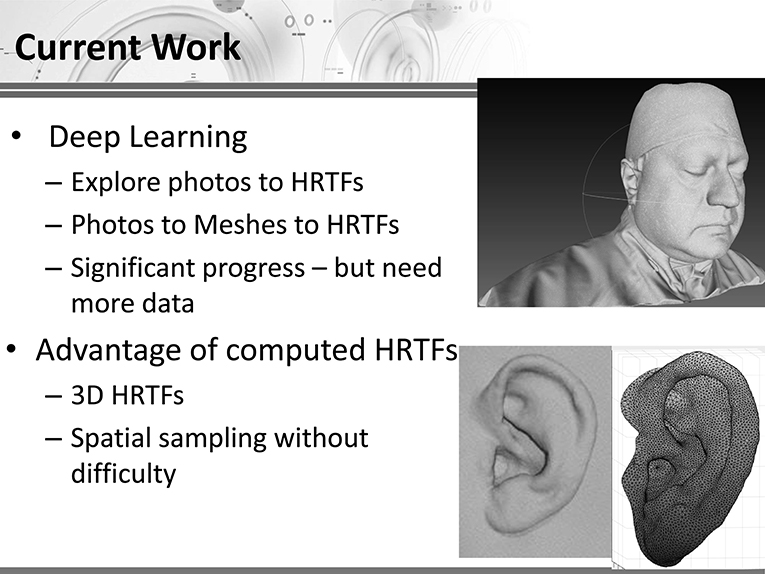

At CES in January, Creative Labs presented a potentially more practical solution: a smartphone app that lets you take pictures of your earlobes and your face, then calculates your HRTF and creates a processing algorithm that can fool you into thinking you’re hearing real speakers. The technology is called Super X-Fi, and it’s available in Creative’s Super X-Fi Amp. The CES demo was buggy, to say the least, and Creative used insert microphones to create HRTF profiles for the listeners -- which led me to believe the photo-based Super X-Fi technology wasn’t reliable enough for a trade-show demo. My post-CES attempts to shoot pictures of my ears and face and use the app to calculate my HRTF have all failed because the app is buggy and refuses to upload the data. The app also requires use of its embedded music player -- and few people will tolerate a second-rate interface for the sake of better sound, especially after they’ve been spoiled by the user-friendly interfaces of Spotify, Tidal, and Qobuz. So despite the fact that the Super X-Fi demo worked amazingly well for me for, literally, about ten seconds, I’m not sure there’s a viable product here.

Still, Duraiswami’s presentation convinced me that this technology has legs. He went deep into the details of how HRTFs are calculated -- an unbelievably complex process that demands a great deal of computing power -- and discussed how it’s possible to do this from photos that show the user’s physical characteristics. Even though the processing would likely take an impractical amount of time on a smartphone, Duraiswami said the calculations take only about four minutes on the Amazon cloud.

I think that if this technology can be made to work reliably, on a wide selection of affordable devices, it’ll be the most important development in headphone sound since 1958, when John Koss invented stereo headphones. Active headphones, with built-in amps, wireless receivers, and digital signal processing (DSP), have taken over the market, and the best of these headphones may already have enough DSP power to do effective HRTF processing -- and even if they don’t, they probably will in a year or two. And then we’ll have sound quality and spatial realism that no traditional passive headphones and analog electronics can even approach, at prices that average people can afford. If we can get effective HRTF processing plus higher-quality noise canceling, we’ll be in for an exciting new generation of headphones.

. . . Brent Butterworth