I think I just heard the future of headphones -- and I wasn’t really even listening to headphones. Technically, the product I was listening to is a PSAP, or personal sound amplification product: the Nuheara IQbuds2 Max, which I’m measuring for a technical publication along with some other PSAPs. The Nuheara IQbuds2 Max earbuds ($399 USD) are basically a set of true wireless earbuds with features added for hearing enhancement. Like many audio products that have come before them, the IQbuds2 Max earbuds seek to adapt their output to best suit the listener’s hearing characteristics. The difference is that this product actually seems to work.

As I see it (or hear it), the problem with technologies that optimize their performance for a listener’s hearing is that they’re only as good as their target curve -- the idealized, perceived frequency response curve they’re trying to achieve. It might seem that deciding on a target curve is simple: just make it flat. But with headphones and earphones, what’s “flat”? Is it the Harman curve? If so, which of the three different ones? Or is it the curve Sonarworks used for its True-Fi app? And while those curves have been shown to work well for music, do they work as well for enhancing dialogue clarity, which is the purpose of a PSAP? And how do you overcome the fact that what you’re hearing through your unaided, imperfect ears is your reference, and any attempt to correct that may sound unnatural?

Unfortunately, based on my experience with several smartphone apps and a few headphones/earphones that test the user’s hearing and apply a correction curve, it appears to me that many of these products’ target curves were built on the general knowledge of human hearing an audio product designer might possess, rather than on the deep scientific expertise a PhD in audiology would bring. As I learned when I started researching PSAPs and hearing aids, hearing correction is a field entirely separate from audio, with its own publications and science that would be unfamiliar to most readers of the Journal of the Audio Engineering Society (like me).

At this point I should note that, like almost all 58-year-old males, I have some hearing loss, as I found when I had my hearing tested last year: no significant hearing above 13kHz, and a mild “noise notch” between 3 and 6kHz in my left ear. That isn’t enough loss for me to benefit significantly from hearing assistance; in a more recent hearing test in May, conducted online for another PSAP I’m testing, the audiologist (who hadn’t been told much about the project) fretted that I didn’t have enough hearing loss to be able to evaluate a PSAP. (“Just crank the correction way up so he can hear it,” the engineer from the manufacturer instructed.)

So I have some idea of what a hearing test and correction algorithm should do for me: moderate mid- and high-frequency correction in my left ear, and just a little high-frequency boost in my right. But so often, what I’ve gotten from apps is either no appreciable result or way too much high-frequency boost, like the dialogue-boosting EQ curves you may have heard in soundbars and TVs.

Considering it took about a decade for the audio industry to get to the point where most of the room-correction algorithms included in audio/video receivers work reasonably well, it shouldn’t have surprised me that most of the hearing-correction algorithms in smartphone apps and headphone/earphone products didn’t work well for me. But I’m glad I found one that did.

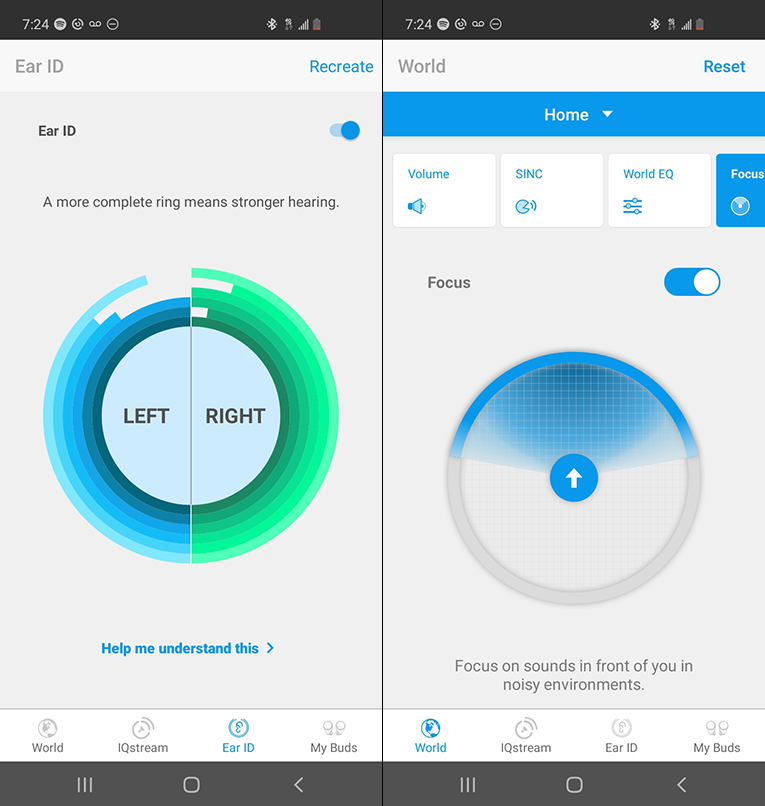

As in the hearing tests an audiologist conducts, the IQbuds2 Max app plays tones of different frequencies at various levels to determine the threshold of audibility for each of those frequencies in each ear. I’d guess the test took five to ten minutes. Immediately after taking it, I leashed up my dog, headed off for a walk, and started listening to some favorite jazz and pop tunes.
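For readers curious about what a test like this does under the hood, here’s a minimal Python sketch of the “down 10dB, up 5dB” threshold search used in clinical pure-tone audiometry, simplified from the modified Hughson-Westlake method. It is not Nuheara’s code, and the app’s actual procedure isn’t described here. The function name, the 40dB starting level, the -10 to 120dB range, and the stopping rule (two responses at the same level, each reached on an ascending step) are my assumptions, and the simulated listener hears any tone at or above a fixed level, which real ears don’t do so tidily.

```python
from typing import Callable, Dict, Optional


def find_threshold(hears: Callable[[int], bool], start_db: int = 40,
                   min_db: int = -10, max_db: int = 120) -> Optional[int]:
    """Hypothetical simplified 'down 10, up 5' threshold search for one tone.

    Returns the lowest level (dB) heard twice on ascending presentations,
    min_db if everything is heard, or None if nothing is heard up to max_db.
    """
    level = start_db
    ascending_hits: Dict[int, int] = {}
    prev_heard: Optional[bool] = None
    while True:
        heard = hears(level)
        if heard:
            if level <= min_db:
                return min_db  # heard even the quietest tone we can play
            if prev_heard is False:  # we got here by stepping up 5dB
                ascending_hits[level] = ascending_hits.get(level, 0) + 1
                if ascending_hits[level] >= 2:
                    return level
            level = max(min_db, level - 10)  # heard it: drop 10dB
        else:
            if level >= max_db:
                return None  # no response at the maximum level
            level = min(max_db, level + 5)  # missed it: raise 5dB
        prev_heard = heard


# Simulated ear with hypothetical "true" thresholds per test frequency (Hz -> dB).
true_thresholds = {500: 10, 1000: 12, 2000: 18, 4000: 33, 8000: 28}
audiogram = {f: find_threshold(lambda lvl, t=t: lvl >= t)
             for f, t in true_thresholds.items()}
print(audiogram)
```

Because the search moves in 5dB steps, the reported threshold is the first step at or above the listener’s true threshold, which is why clinical audiograms are plotted in 5dB increments.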

After walking a couple of blocks, I started to think, “These sound really good!” That surprised me because most PSAPs do not sound good with music. Curious how much of a role the correction curve played in this, I pulled up the app and turned the correction off. I heard exactly what I should have heard with the hearing correction deactivated: a slight decrease in high frequencies, and a little loss of upper midrange and lower treble detail in the left ear. It didn’t overcorrect or undercorrect; it got things just right. I later noticed that the app includes a diagram that roughly shows how much and at what frequencies the app corrects the response for each ear.
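To illustrate how an audiogram can become a per-ear correction curve like the one in that diagram, here’s a sketch using the half-gain rule, an old and deliberately simple hearing-aid fitting rule that sets the gain at each frequency to half the measured hearing loss. It is an assumption for illustration, not the IQbuds2 Max’s fitting method. The function names, the 30dB gain cap, and the sample left-ear audiogram are hypothetical, and gains between test frequencies are interpolated on a logarithmic frequency axis.

```python
import math
from typing import Dict


def half_gain_curve(audiogram: Dict[int, float],
                    max_gain_db: float = 30.0) -> Dict[int, float]:
    """Hypothetical half-gain rule: gain = 0.5 x hearing loss, capped."""
    return {f: min(max_gain_db, 0.5 * max(0.0, loss))
            for f, loss in audiogram.items()}


def gain_at(curve: Dict[int, float], freq_hz: float) -> float:
    """Interpolate gain (dB) between test frequencies on a log2 frequency axis."""
    points = sorted(curve.items())
    if freq_hz <= points[0][0]:
        return points[0][1]
    if freq_hz >= points[-1][0]:
        return points[-1][1]
    for (f0, g0), (f1, g1) in zip(points, points[1:]):
        if f0 <= freq_hz <= f1:
            t = (math.log2(freq_hz) - math.log2(f0)) / (math.log2(f1) - math.log2(f0))
            return g0 + t * (g1 - g0)
    raise ValueError("unreachable for a sorted, non-empty curve")


# Hypothetical left ear with a mild notch around 4kHz (Hz -> dB of loss).
left_ear = {1000: 10, 2000: 20, 4000: 35, 8000: 30}
left_gain = half_gain_curve(left_ear)
print(left_gain)  # {1000: 5.0, 2000: 10.0, 4000: 17.5, 8000: 15.0}
print(round(gain_at(left_gain, 3000), 1))
```

Turning the correction off in the app amounts, in this model, to setting every gain back to 0dB, which is the A/B comparison described above.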

There are all sorts of enhancements available in the IQbuds2 Max that can aid hearing: adjustable boost in the speech band, adjustable rejection of ambient noise, and the ability to focus on sounds coming from in front of you while rejecting sounds from behind. But that’s outside the purview of SoundStage! Solo. What’s important here is that the IQbuds2 Max earbuds show that this technology can be just as effective for music reproduction as it is for speech enhancement -- especially considering how many audio enthusiasts are men in their 50s, 60s, or 70s.

Considering that headphones with app-controllable digital signal processing have become so common, I think it’s inevitable that we’ll see more -- and more effective -- hearing correction built into headphones and earphones. When done right, it’ll deliver a tremendous benefit for millions of listeners. But based on what we saw in the development of room-correction algorithms, I expect we have several years to go before most companies get it right.

. . . Brent Butterworth