Of the more than 100 new audio products that pass through my home every year, few stay longer than a couple of months. But after I tested a sample of the AKG K371 headphones borrowed from another reviewer, I immediately ordered a set from Amazon. Not only do they sound great; they’re a superb reference for any reviewer or headphone enthusiast. Probably more than any other headphones available today, they tell us what sound most listeners like. The K371s’ response closely matches the Harman curve, developed by running hundreds of headphones past hundreds of listeners in blind tests to find out what kind of sound most listeners prefer.

Although I respect the science behind the K371 headphones, I don’t have to trust it, because the results from my listening panelists -- who had no idea of the K371s’ pedigree -- confirm the headphones’ excellence. Based on the many glowing reviews I’ve read of the K371s, I expect they will become an industry standard, alongside the Sony MDR-7506 headphones -- which were launched more than a decade before the Harman headphone research began but happen to come very close to the recommended target response. The science worked.

The tuning of the K371s was developed using short-term, blind comparisons of different headphone response curves -- yet this same process is derided by most high-end audio reviewers and publications as insufficiently revealing of flaws. Their preference is for long-term, casual, sighted listening tests where few, if any, controls are in place. They’ve recently come up with a sexy marketing term for this: “slow listening,” perhaps inspired by a 2018 paper titled “On Human Perceptual Bandwidth and Slow Listening” by Thomas Lund and Aki Mäkivirta.

Lund and Mäkivirta cite various scientific references to show that the amount of information the human brain can take in over a short time interval is limited (a fact that should come as a surprise to no one). They imply that short-duration listening tests are inadequate, although the only purported failure of such tests that they mention is that they “have been used to promote lossy data reduction” -- and they present no specific examples of where, exactly, short-duration tests have failed to identify flaws in lossy data reduction technologies.

Although I’m an Audio Engineering Society member and an enthusiastic reader of its publications, I’d have missed this paper had it not been cited by Stereophile editor Jim Austin in a recent opinion piece that embraces the notion of “slow listening.” Now that the term has been touted in an influential audio publication, I expect its use to spread in audio reviews and forums -- and more ominously, in marketing efforts used to promote products whose performance falls short of modern standards.

Lund and Mäkivirta see the danger here, warning that “. . . repeatable procedures need to be established so ‘more testing time’ does not become a way of defending just any claim.” But “more testing time” has become the mantra of audiophiles and reviewers whose beliefs continually fail to be confirmed by short-term controlled testing. With “slow listening,” we now have a marketing phrase with an artsy, organic vibe to glamorize uncontrolled, casual listening tests in which no attempt is made to eliminate the effects of listener bias.

When you compare the results of short-duration blind tests with the results of “slow listening,” it’s clear which technique works and which doesn’t. The AKG K371 headphones are only the latest example of what short-duration blind listening tests can achieve. More importantly, short-duration blind tests gave us the speaker-design standards developed at Canada’s National Research Council.

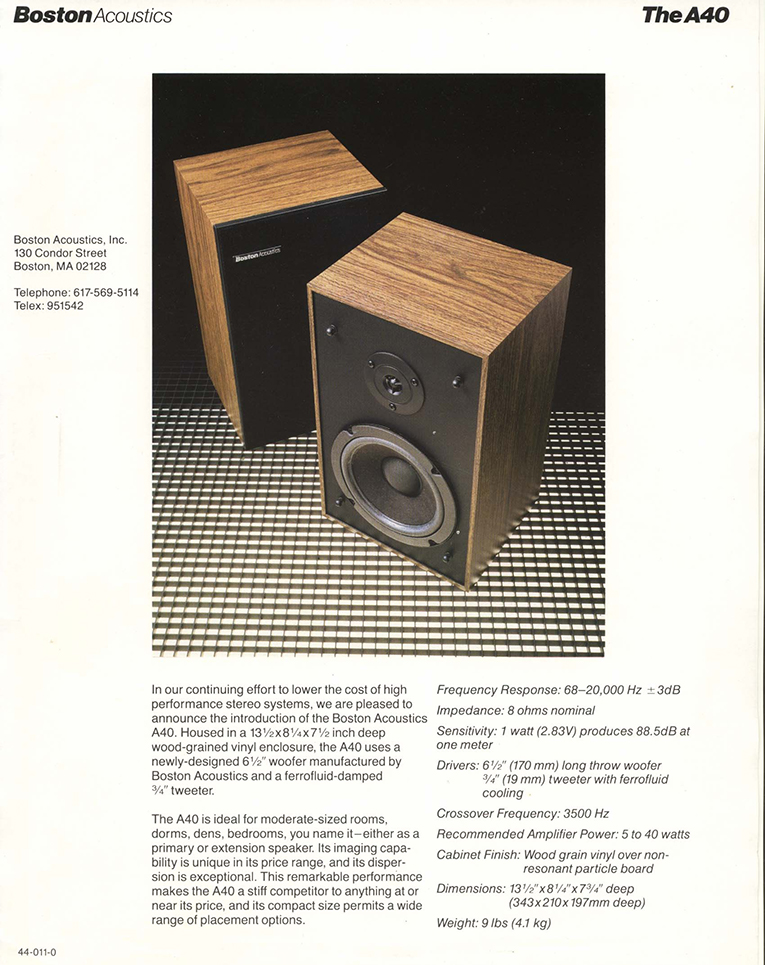

As anyone who followed the development of speakers in the 1990s can tell you, we began that decade with speakers as wildly different as headphones are now. I remember in 1991 asking veteran audio reviewer Len Feldman to recommend a good, cheap speaker, and the only one he could think of was the Boston Acoustics A40. As the results of the NRC research started to spread, speaker design improved radically. By the end of the decade, I noticed that the majority of the conventional speaker designs passing through my listening room delivered good, and often great, performance. I can now easily name a dozen speakers that are better than the A40 but sell for less even before adjusting for inflation.

While we often read claims of audio products being developed through innumerable listening tests over the course of months -- i.e., slow listening -- I can’t think of an example of such a product that delivers demonstrably better performance than competitors developed using short-duration blind tests.

Worse, the slow-listening review process is now producing raves for products with obvious flaws that controlled, blind listening tests and measurements would quickly reveal. Examples include large single-driver loudspeakers with unavoidably narrow dispersion and severe colorations in the upper midrange and treble, and single-ended tube amplifiers with audible treble and bass roll-off as well as high output impedance that causes your speakers or headphones to deviate substantially from their intended response.
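
To put rough numbers on the output-impedance point, here is a minimal Python sketch of the voltage-divider effect. It rests on several assumptions that are mine, not measurements: the speaker impedance values are hypothetical, the impedances are treated as simple resistances (magnitude only, no phase), and the two amplifier output impedances are illustrative round figures rather than specs for any real product. The function names are made up for this example.

```python
import math


def level_change_db(load_ohms, output_ohms):
    """Level at the load, in dB, relative to an ideal zero-impedance source.

    The amplifier's output impedance and the load form a voltage divider,
    so the load receives load / (load + output) of the source voltage.
    """
    return 20 * math.log10(load_ohms / (load_ohms + output_ohms))


def response_spread_db(load_curve, output_ohms):
    """Gap between the loudest and quietest frequencies caused by the divider."""
    levels = [level_change_db(z, output_ohms) for z in load_curve.values()]
    return max(levels) - min(levels)


# Hypothetical speaker impedance magnitudes (ohms) keyed by frequency (Hz).
# Assumed values for illustration only, not a measured speaker.
speaker = {50: 20.0, 200: 4.0, 1000: 8.0, 3000: 16.0, 10000: 6.0}

# Assumed output impedances (ohms), chosen only to contrast low with high.
amps = [("low-output-impedance amp", 0.1), ("high-output-impedance amp", 3.0)]

for name, z_out in amps:
    spread = response_spread_db(speaker, z_out)
    print(f"{name}: {spread:.2f} dB spread across the band")
```

With these assumed numbers, the low-impedance amp changes the speaker's response by a fraction of a decibel, while the high-impedance amp tilts it by several decibels, because the frequencies where the speaker's impedance dips lose far more level than the frequencies where it peaks. Real loads are reactive, so a proper calculation would use complex impedance, but the trend is the same.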

After 29 years of reviewing, and thousands of product reviews, I can certainly think of times when I uncovered some minor performance flaw in a product after long-term exposure to it. But every month I encounter new examples of short-duration comparison testing revealing flaws that I missed in casual “slow listening” sessions.

I hope someday someone will present substantial experimental data that shows multiple examples where long-term listening revealed something missed in short-duration blind tests. But until then, whenever I hear the phrase “slow listening,” I’ll get the impression someone’s trying to sell me something that can’t hold up to a serious, controlled evaluation.

. . . Brent Butterworth